I am currently a third-year PhD student in computer science at the University of Massachusetts Amherst, working with Prof. Chuang Gan. Previously, I was an undergraduate at Zhejiang University and the University of Illinois Urbana-Champaign.

My research interests lie in foundation models and their efficiency.

🔥 News

- 2025.05 CommVQ is accepted by ICML2025.

- 2024.08 FlexAttention is accepted by ECCV2024. Check our Project Page and also GitHub.

- 2024.01 CoVLM is accepted by ICLR2024. Check our Project Page and also GitHub.

- 2023.07 EfficientViT is accepted by ICCV2023. Check it on GitHub.

- 2023.06 ToP is accepted by KDD2023. Check it on GitHub.

- 2023.03 ProxylessGaze is publicly available as an application of ProxylessNAS. It is an open-source gaze estimation pipeline that covers face detection, facial landmark detection, and gaze estimation, and runs in real time on Raspberry Pi 4, Qualcomm GPU, and Intel CPU.

📝 Publications

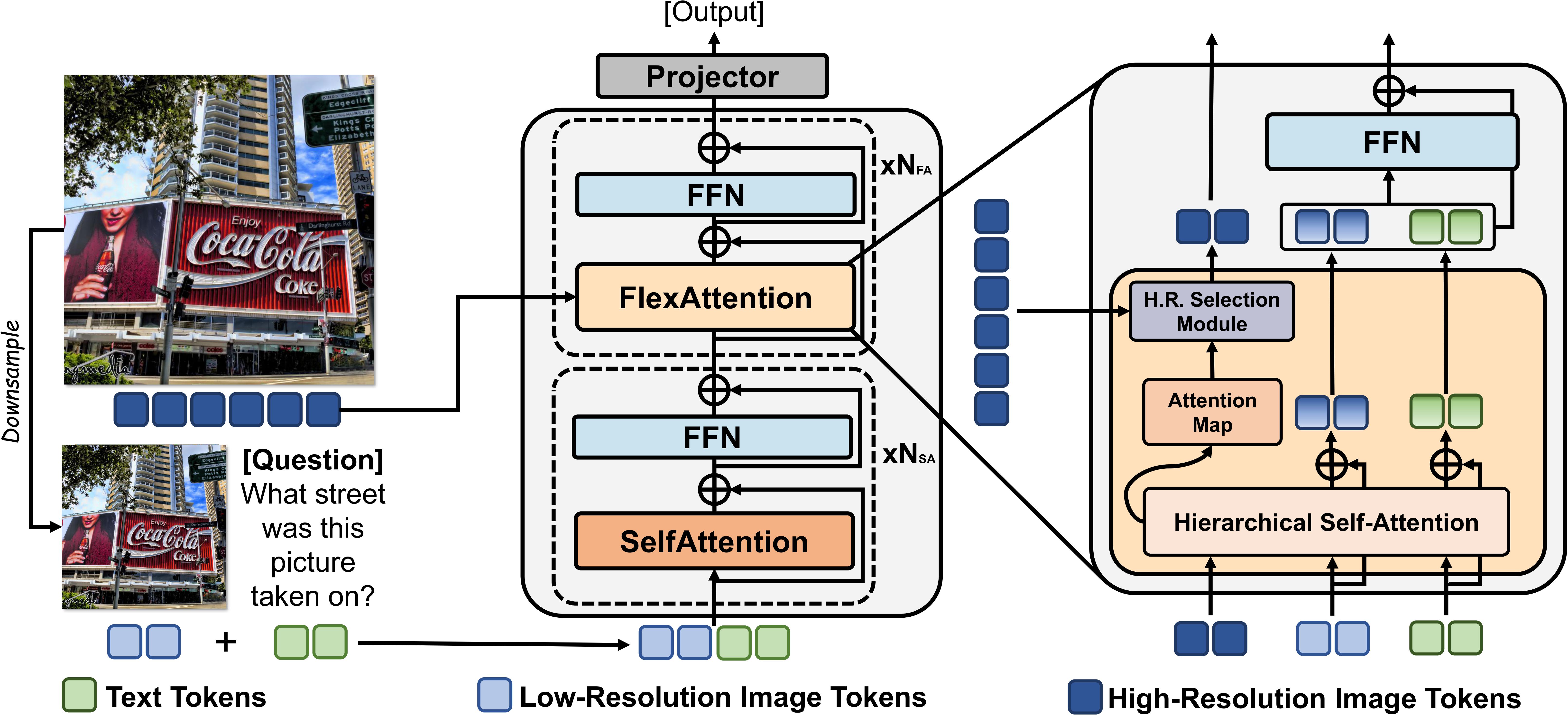

FlexAttention for Efficient High-Resolution Vision-Language Models

Junyan Li, Delin Chen, Tianle Cai, Peihao Chen, Yining Hong, Zhenfang Chen, Yikang Shen, Chuang Gan

GitHub | Project Page

- FlexAttention is a plug-and-play attention module that efficiently enhances VLMs’ ability to perceive details in high-resolution images.

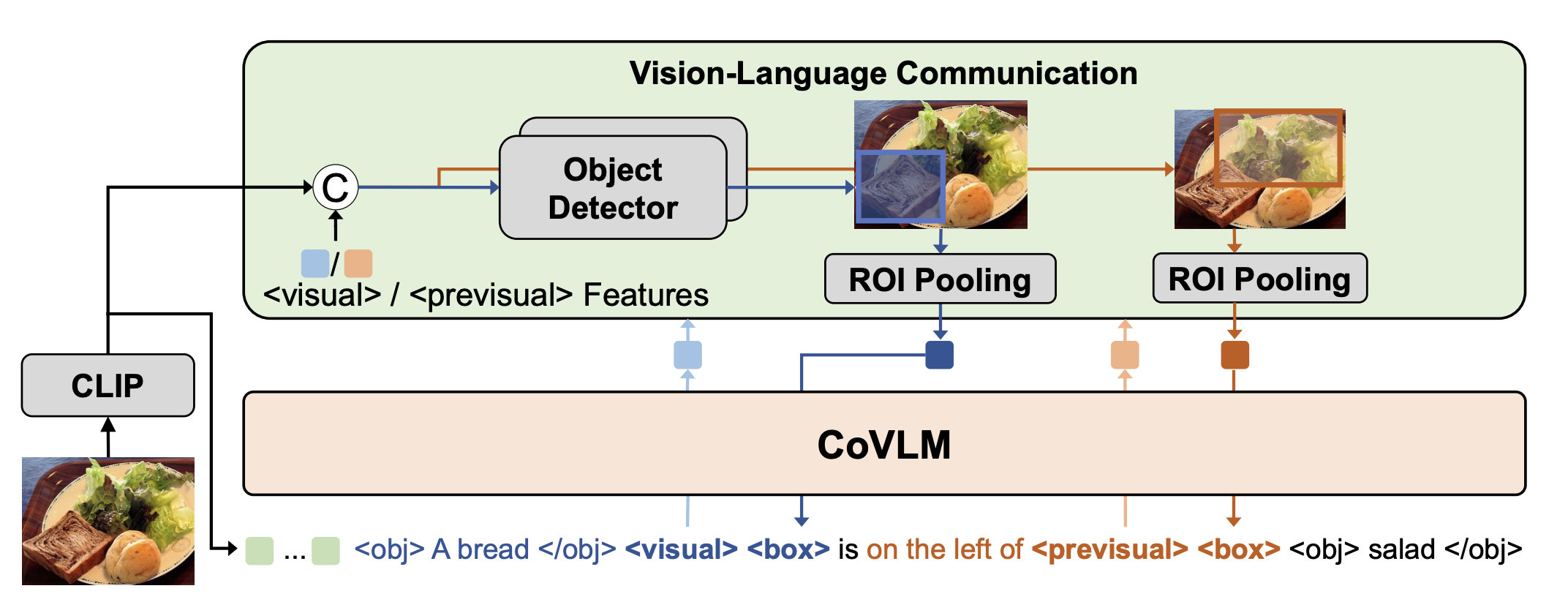

Junyan Li, Delin Chen, Yining Hong, Zhenfang Chen, Peihao Chen, Yikang Shen, Chuang Gan

GitHub | Project Page

- CoVLM is designed to guide the VLM to explicitly compose visual entities and relationships in the text, and to communicate dynamically with the vision encoder and detection network to achieve vision-language communicative decoding. It boosts the compositional reasoning ability of VLMs and achieves SoTA performance on various tasks involving compositional analysis.

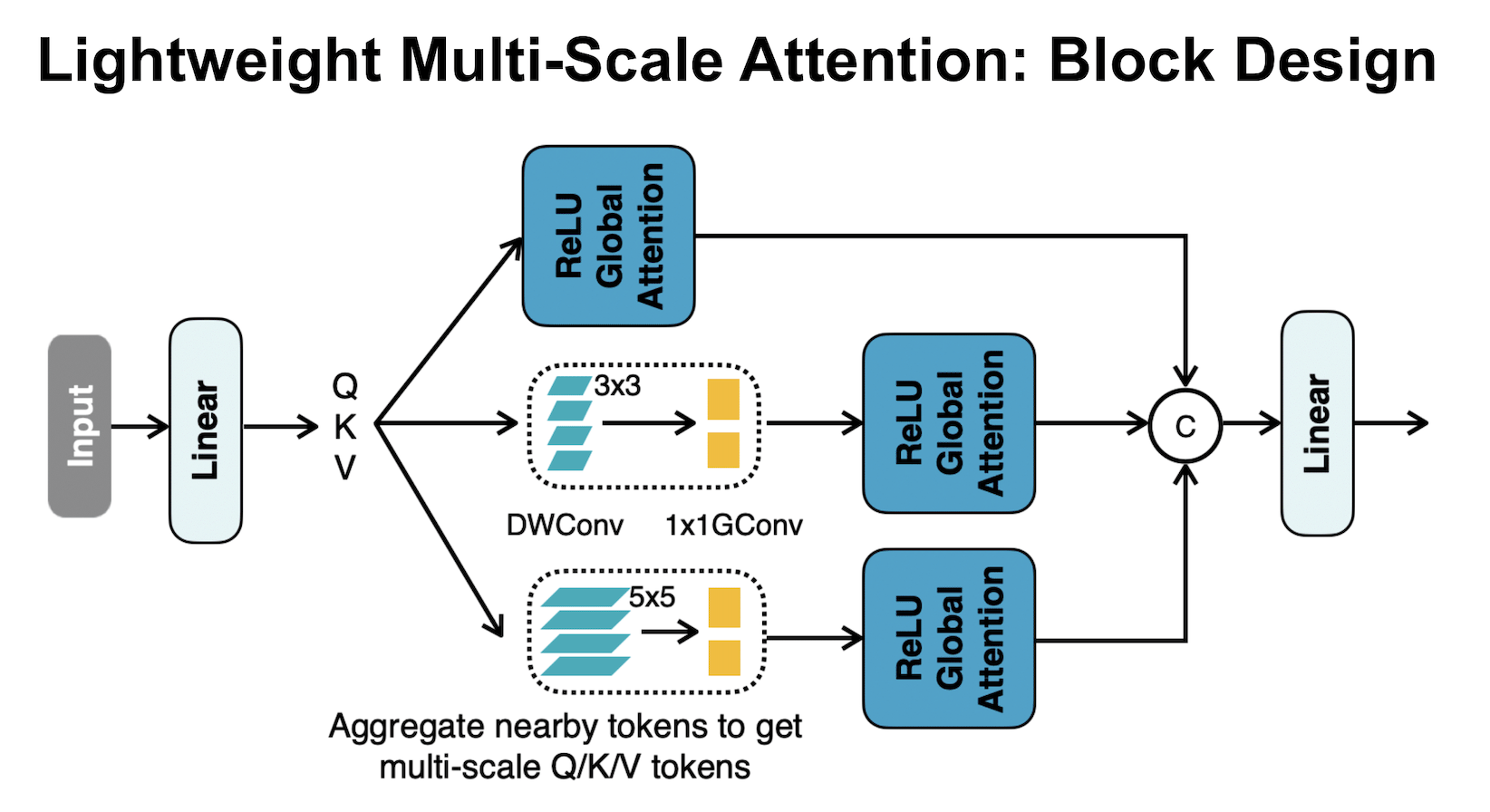

EfficientViT: Lightweight Multi-Scale Attention for On-Device Semantic Segmentation

Han Cai, Junyan Li, Muyan Hu, Chuang Gan, Song Han

GitHub | Poster

- EfficientViT is a new family of vision models for efficient high-resolution vision, especially segmentation. Its core building block is a new lightweight multi-scale attention module that achieves a global receptive field and multi-scale learning using only hardware-efficient operations.

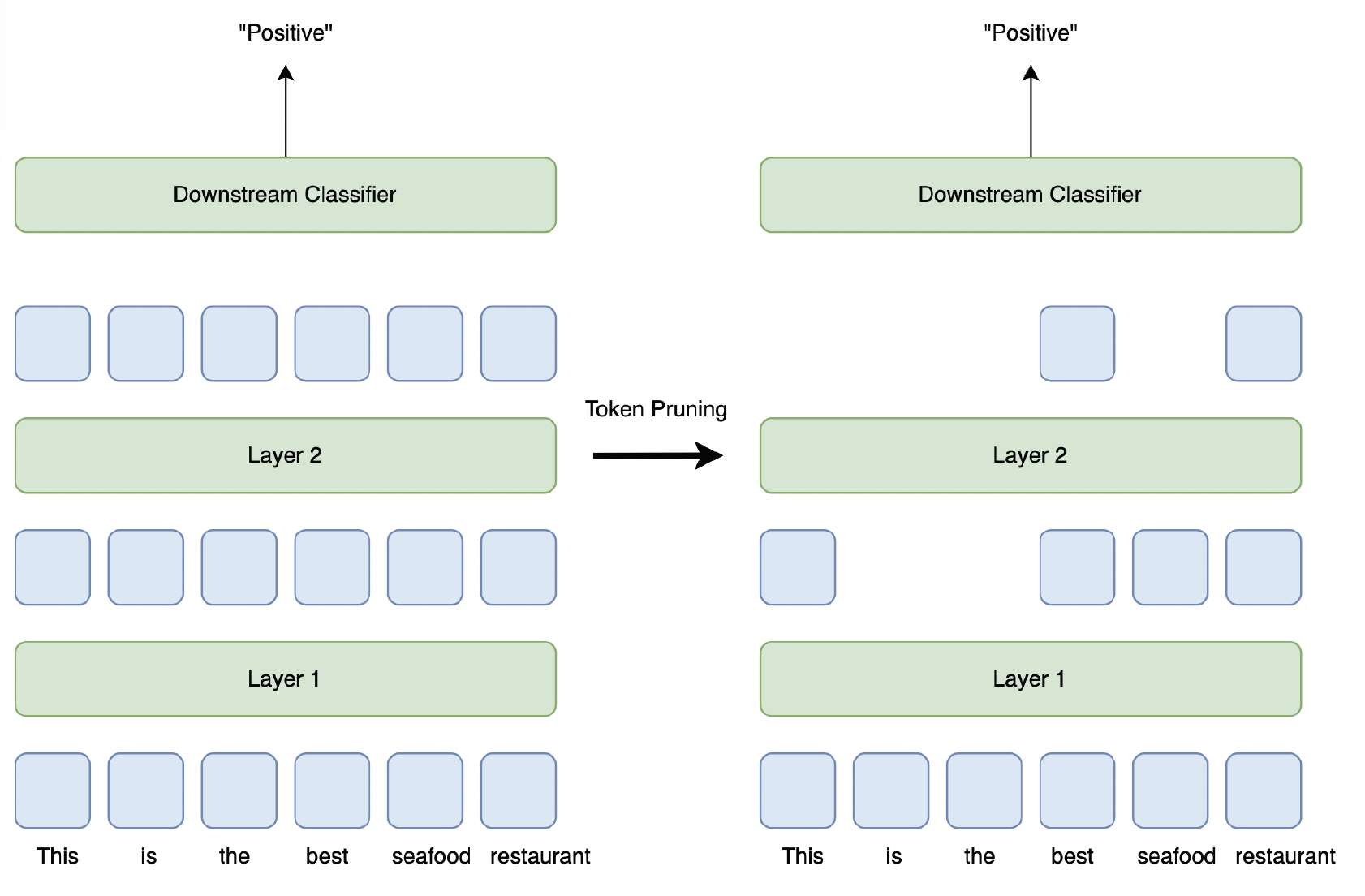

Constraint-aware and Ranking-distilled Token Pruning for Efficient Transformer Inference

Junyan Li, Li Lyna Zhang, Jiahang Xu, Yujing Wang, Shaoguang Yan, Yunqing Xia, Yuqing Yang, Ting Cao, Hao Sun, Weiwei Deng, Qi Zhang, Mao Yang

GitHub | Poster

- ToP is a deployment-friendly token pruning solution for Transformers.

🎖 Honors and Awards

- 2022.11 Zhejiang Provincial Scholarship

- 2022.11 Zhejiang University Second Scholarship

- 2020.11 Zhejiang University Second Scholarship

📖 Education

- 2023.09 - (now), PhD, computer science, University of Massachusetts Amherst.

- 2019.09 - 2023.06, Undergraduate, computer engineering, University of Illinois Urbana-Champaign.

- 2019.09 - 2023.06, Undergraduate, computer engineering, Zhejiang University.

💻 Internships

- 2025.06 - 2025.08, Apple Inc., Seattle, USA.

- 2024.06 - 2024.08, Toyota Research Institute, Los Altos, USA.

- 2023.05 - 2023.08, NVIDIA, Shanghai, China.

- 2022.08 - 2023.02, Microsoft Research Lab - Asia, Beijing, China.

- 2021.06 - 2021.08, Momenta, Beijing, China.